Vision Inspection Using Machine Learning/Artificial Intelligence

Pharmaceutical companies rely on automated vision inspection (AVI) systems to help ensure product safety. Although these systems overcome challenges associated with manual inspection, they can be hindered by limitations in their programming—if the system is programmed to consider every variation in inspection conditions, it is likely to falsely identify defects in safe products. This article discusses a project that explored how artificial intelligence (AI) and machine learning (ML) methods can be used as business tools to learn about the ejected product images, understand the root causes for the false ejects, trend the true defects, and take corrective actions.

The United States Pharmacopeia (USP) Convention requires visual inspection of all products intended for parenteral administration [1, 2]. Pharmaceutical products must be essentially or practically free of observable foreign and particulate matter. Additionally, any product, container, or closure defects potentially impacting the product or patient must be detected and ejected.

Although 100% manual inspection is the standard for detection of visible particulate matter and other defects, it is a slow, labor-intensive process, and not a match for high-volume production. Therefore, to meet visual inspection requirements, pharma companies have turned to automated vision inspection systems, which use arrays of cameras paired with image processing (vision) software to automatically inspect sealed containers using the image visuals. The high throughput of these machines is achieved by machine vision engineers who develop, qualify, and implement the vision recipes on automated vision inspection machines for the relevant products.

The automated vision inspection method of inspecting products in containers is a well-established process; however, it is not yet perfect when it comes to handling variations in lighting conditions, physical containers, product appearance, environmental factors, camera position, and other contributors that may change continuously. Generally, automated vision inspection systems come with vision software that uses heuristic/rule-based methods to differentiate good products from defective ones. Defects range from missing components of the final product, such as caps and stoppers, to purely visual ones, such as dirt or scratches on the outside of the container, and critical defects like cracks and particles. The challenge is that heuristic methods cannot be programmed to account for every variation. It is nearly impossible to tune machines to cover all variations while ensuring true defect detection; the result is false ejects. To address this challenge, we investigated how AI and machine learning technologies can help overcome automated vision inspection system limitations.

Project Inception

Before any data analytics work began, our team focused on building a good data set that included drug product images and process data. Because the machines inspecting the lyophilized products were already designed to retain all ejected images, the team used those data to develop its first AI/ML models. Initially, the team selected a small set of cameras that are used to detect specific defect categories to focus their modeling efforts.

To confirm, classify, and monitor true defect occurrences, the vision team developed a data collection method to pull and organize all the eject images that the machines use for decision-making. These images were from both the machines and the manual inspection of the automated vision inspection eject population, which is done to confirm, classify, and monitor defects via process control limits. Once the data repository was created, the team created dashboards that gave near real-time eject insights about which components and associated suppliers were the potential source of the ejects.

As part of the exploratory phase for using AI/ML for automated vision inspection, our team conducted two proof-of-concept experiments:

- Experiment 1 tested the hypothesis that ML/AI can help vision system experts tune automated vision inspection machines by identifying and sorting false ejects from large image data sets.

- Experiment 2 tested the hypothesis that ML/AI can facilitate a streaming analytics platform for the automated vision inspection process. This streaming analytics platform will support the vision system experts with rapid identification and remediation of both true and false ejects from the upstream drug product unit operations.

For both experiments, the vision inspection team collaborated with the data science team to explore whether advanced techniques could be used to further improve the capabilities of inspection. Immediate value was seen in developing the AI/ML algorithms to classify the defect images. The team then set out to develop a prototype convolutional neural network (CNN) to test the ability of AI/ML models to identify true versus false ejects.

The first step was to assemble data sets of relevant images to develop the convolutional neural network model. The vision inspection team began labeling ejected images that had been saved from lyophilized vial automated vision inspection machines. After the eject- and acceptable-image sets had been assembled, the team collaborated with the data science team to develop a deep learning model that could classify the images. Using Python, OpenCV, Keras, and TensorFlow, the team developed a ResNet-50 convolutional neural network architecture for classifying the images.

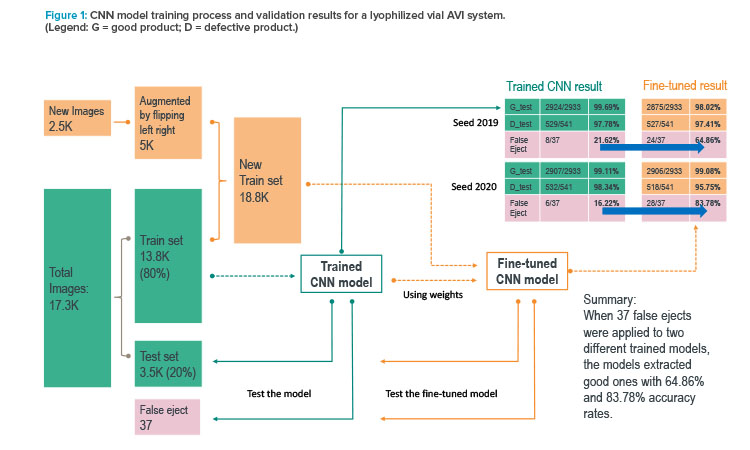

Figure 1 presents the performances of two sample models in multiple validation tests that evaluated how closely each deep learning model could replicate the automated vision recipe developed by the vision engineers for cameras. The team used a binary classification model to separate good units (G) from defective units (D).

In Figure 1, the teal-colored path and boundaries mark the first (initial) trained model. When this model was fed 37 previously unseen false ejects from the current automated vision inspection system, it identified only eight of them (22%) as good. The key reason for the low performance was that the training data set did not contain the hard-to-classify variations.

The team then augmented the training data sets with more variation (refer to the yellow and orange parts of Figure 1). The fine-tuned model showed much improved potential to reduce the number of false ejects. For example, when 37 false-eject images (previously unseen by the model) were input in the model for inference, the model was able to identify 24 (65%) as good units.
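
The comparison between the two models comes down to simple arithmetic on the same 37 false-eject images. The short Python sketch below reproduces those percentages; the function name `recovered_fraction` is ours, introduced only for illustration, and the counts are the ones quoted above.

```python
def recovered_fraction(identified_good: int, false_ejects: int) -> float:
    """Fraction of known false ejects that a model correctly calls good."""
    if false_ejects <= 0:
        raise ValueError("false_ejects must be positive")
    if not 0 <= identified_good <= false_ejects:
        raise ValueError("identified_good must lie between 0 and false_ejects")
    return identified_good / false_ejects


FALSE_EJECTS = 37  # previously unseen false-eject images, as reported in the text

initial = recovered_fraction(8, FALSE_EJECTS)      # first trained model
fine_tuned = recovered_fraction(24, FALSE_EJECTS)  # model trained on augmented data

print(f"initial model:    {initial:.1%}")     # 21.6%
print(f"fine-tuned model: {fine_tuned:.1%}")  # 64.9%
```

With a test set of only 37 images, each image moves the result by almost three percentage points, so these figures are best read as an indication of potential rather than a precise performance estimate.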

The results from the prototype convolutional neural network were extremely promising. In developing the model, the team used 2,500 images that had been manually inspected to determine whether they were ejects or acceptable product, plus 17,300 images that were not manually labeled but used the current automated vision inspection system’s classification. Because the current system was shown to eject acceptable units, the training set of images contained units that would be acceptable by manual classification. The current system’s overly cautious nature of ejecting good product was learned by the convolutional neural network because the neural net is dependent on having correctly labeled data to train on. The ability of the neural net to extract the features of defective images, while also improving the detection of acceptable products, showed the team the potential of using deep learning for automated vision inspection.

If a convolutional neural network could be developed to identify signals buried within images, it could reveal insights into the process that cannot be seen by humans. Given the breadth of analysis possible with AI/ML, existing vision recipes could potentially be drastically improved by incorporating models developed from large classified sets of images instead of relying on the traditional inspection window techniques currently in use. Using a large set of images, the convolutional neural network model was trained to provide a highly accurate classification of images that was not possible using a traditional rule-based automated vision inspection system.

The outcomes of both experiments demonstrated the ability of AI/ML models to provide a rapid and thorough root-cause analysis of eject rates, leading to eject-rate reduction. It should be noted that none of the images used in these experiments were from drug product units that were released for human use.

Proof of Value

Given the successful outcome of the initial proof-of-concept experiments, the automated vision inspection project team launched a formal project with an expanded scope to show proof of value. For this project, the team adopted an agile delivery approach that focused on specific user stories to determine the user requirements for a minimum viable product. As part of this process, the team held workshops to bring together different user personnel and capture their user stories to make sure the solution addresses their needs.

The team selected three use cases and focused on two goals for this proof-of-value project:

Goal 1: To create an image catalogue and contextualize data into a centralized data repository to (a) enhance intra-batch troubleshooting and decision-making and automated vision inspection machine tuning through a deeper understanding of the ejected images; and (b) provide enterprise data for the component performance associated with ejected units that will enhance negotiations between procurement and component suppliers.

Goal 2: To use ML/AI to determine trends and generate insights about root causes of false ejects in drug product unit operations, leading to reduced false-eject rates.

Use Case 1

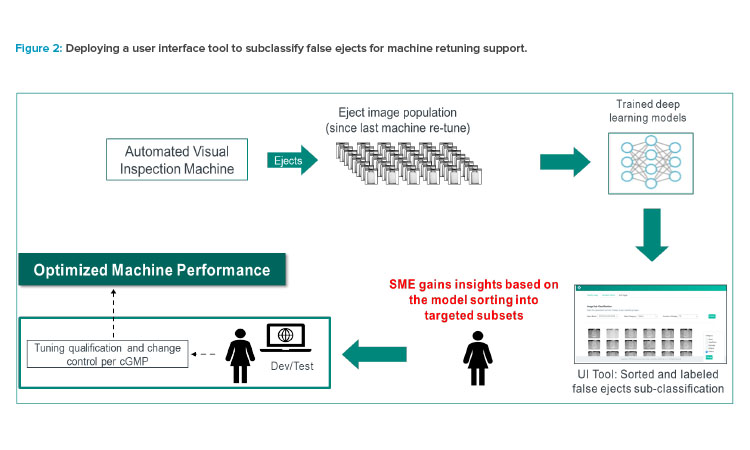

The first use case considered a user interface tool to subclassify false ejects for machine retuning support. Figure 2 shows how AI and machine learning can be used as business tools to sift through the pool of ejected images and group them into different defect and false-eject categories. A vision inspection team member can then quickly review specific categories and follow the cGMP process to retune the vision machines.

Use Case 2

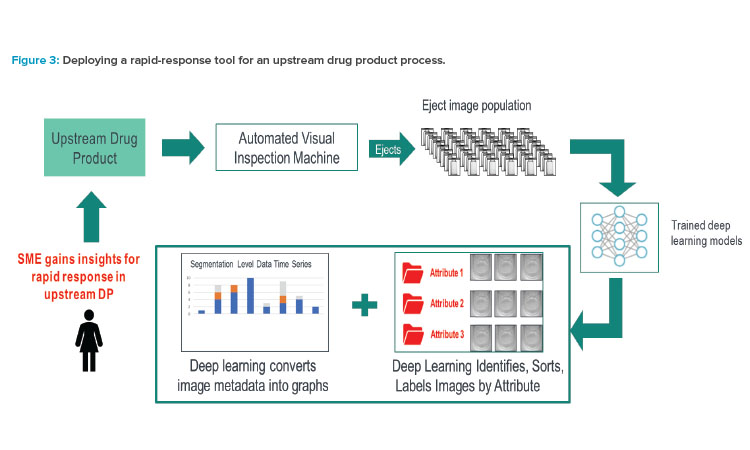

The second use case involved a rapid-response tool for an upstream drug product process. Figure 3 shows how an AI/ML–trained model can be used as a business tool to sift through the pool of ejected images and classify them by different attributes (defect and false-eject categories). An upstream process team can then compare the ejects over multiple batches, analyze the defect trends, perform root-cause analysis, and follow the cGMP process to make changes to address the true defects or false ejects.
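
Once a model has assigned a category to each ejected image, comparing ejects across batches is a counting exercise. The following Python sketch illustrates one possible way to do this, under our own assumptions: the batch identifiers, the category names, and both helper functions are hypothetical and do not describe the team's actual tool.

```python
from collections import Counter, defaultdict


def eject_counts_by_batch(records):
    """Count ejected images per batch and per category.

    `records` is an iterable of (batch_id, category) pairs, where the
    category is whatever label the classification model assigned.
    """
    counts = defaultdict(Counter)
    for batch_id, category in records:
        counts[batch_id][category] += 1
    return counts


def category_share(counts, batch_id, category):
    """Share of a batch's ejects that fall in one category (0.0 if none)."""
    batch = counts.get(batch_id, Counter())
    total = sum(batch.values())
    return batch[category] / total if total else 0.0


# Hypothetical classified ejects from two batches.
records = [
    ("B001", "crack"),
    ("B001", "false_eject"),
    ("B001", "false_eject"),
    ("B002", "particle"),
    ("B002", "false_eject"),
    ("B002", "crack"),
    ("B002", "crack"),
]

counts = eject_counts_by_batch(records)
for batch_id in sorted(counts):
    share = category_share(counts, batch_id, "crack")
    print(f"{batch_id}: {share:.0%} of ejects classified as crack")
```

A rising share of one true-defect category from batch to batch would point an upstream team toward a specific unit operation, whereas a rising false-eject share would point toward machine retuning.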

Use Case 3

When using automated vision inspection systems to inspect liquid products, differentiating between nondefect bubbles and true particulate matter in solution is a common challenge. In the third use case, the team explored the abilities of AI/ML models to improve the new liquid vial inspection system.

Whereas capturing images from the automated vision inspection machines in the lyophilized vial manufacturing area was relatively simple, the team found it difficult to get images to develop the convolutional neural network model for liquid vial inspection. Specifically, limitations to the image-saving capabilities of the first vision systems used by the team made it challenging to collect a sufficient number of images to encapsulate all the variance needed to effectively predict whether a unit was defective or acceptable.

Once the images were collected from the liquid product camera stations, the team decided to try and improve particle versus bubble classification using morphological image-processing methods instead of a convolutional neural network. The method used information on pixel colors within the image to form the basis for detecting a bubble or particle. The method also captured the size, location, and number of bubbles/particulates found in the vial. Figure 4 shows the techniques used to distinguish between a particle and a bubble.
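
The article does not spell out the exact rules, so the Python sketch below shows only one plausible way a pixel-based method could separate the two cases. It rests on assumptions of ours: the image is a backlit grayscale grid in which objects appear dark, a bubble shows up as a dark ring around a bright centre, and a particle is solid dark. The threshold value and all function names are hypothetical. The sketch also reports the size, location, and number of objects found.

```python
from collections import deque

DARK_THRESHOLD = 100  # hypothetical grey level separating objects from background
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def find_blobs(image, threshold=DARK_THRESHOLD):
    """Group dark pixels (value < threshold) into 4-connected blobs."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] >= threshold:
                continue
            seen[r][c] = True
            queue, pixels = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in NEIGHBOURS:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(pixels)
    return blobs


def encloses_hole(pixels):
    """True if the blob surrounds at least one pixel that is not part of it."""
    blob = set(pixels)
    # Bounding box grown by one pixel so the outside background is connected.
    top, bottom = min(y for y, _ in pixels) - 1, max(y for y, _ in pixels) + 1
    left, right = min(x for _, x in pixels) - 1, max(x for _, x in pixels) + 1
    reached, queue = {(top, left)}, deque([(top, left)])
    while queue:  # flood-fill everything reachable from outside the blob
        y, x = queue.popleft()
        for dy, dx in NEIGHBOURS:
            cell = (y + dy, x + dx)
            if (top <= cell[0] <= bottom and left <= cell[1] <= right
                    and cell not in blob and cell not in reached):
                reached.add(cell)
                queue.append(cell)
    box_area = (bottom - top + 1) * (right - left + 1)
    return len(reached) + len(blob) < box_area  # leftover cells are enclosed


def describe_blobs(image, threshold=DARK_THRESHOLD):
    """Return kind, size (pixel count), and centre (row, col) for each blob."""
    results = []
    for pixels in find_blobs(image, threshold):
        centre = (sum(y for y, _ in pixels) / len(pixels),
                  sum(x for _, x in pixels) / len(pixels))
        kind = "bubble" if encloses_hole(pixels) else "particle"
        results.append({"kind": kind, "size": len(pixels), "centre": centre})
    return results


# Synthetic 7 x 9 image: bright background, one dark ring, one solid dark spot.
image = [[200] * 9 for _ in range(7)]
for y in range(1, 4):
    for x in range(1, 4):
        if (y, x) != (2, 2):
            image[y][x] = 20   # ring with a bright centre
for y in (4, 5):
    for x in (6, 7):
        image[y][x] = 30       # solid spot

results = describe_blobs(image)
for blob in results:
    print(blob)
```

In practice a library such as OpenCV would replace the hand-written flood fills, and the ring-versus-solid rule would need tuning against real images, since lighting and vial geometry change how bubbles appear.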

Once it was possible to distinguish between the bubbles and particulates in the images, a user interface was developed to execute the algorithms in the background. The team built a dashboard showing the trends for bubble counts versus time. The goal was to identify a step change in the process that caused bubbles to be generated. However, once the tool was developed, the vision inspection group saw that the number of bubbles the tool was identifying was much higher than the number of bubbles historically seen. The data the team presented were useful for showing general bubble trends, and the ability to differentiate images of bubbles and particles with high throughput provided new insight into the process for the vision team. Therefore, the team refocused their efforts to use ML to develop automated vision inspection solutions.
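
To make the idea of a step change concrete, the Python sketch below scans a series of bubble counts for the split point with the largest shift in mean. It is an illustration under our own assumptions, not the team's dashboard logic: the counts are invented, and `largest_mean_shift` and `min_segment` are hypothetical names.

```python
from statistics import mean


def largest_mean_shift(counts, min_segment=3):
    """Find the split that maximises the change in mean bubble count.

    Returns (index, shift), where `index` is the first observation after
    the candidate step change and `shift` is mean(after) - mean(before).
    Returns (None, 0.0) if every split shows no change.
    """
    if len(counts) < 2 * min_segment:
        raise ValueError("need at least 2 * min_segment observations")
    best_index, best_shift = None, 0.0
    for i in range(min_segment, len(counts) - min_segment + 1):
        shift = mean(counts[i:]) - mean(counts[:i])
        if abs(shift) > abs(best_shift):
            best_index, best_shift = i, shift
    return best_index, best_shift


# Invented bubble counts per inspection interval, in time order.
bubble_counts = [2, 3, 2, 3, 2, 9, 10, 9, 11, 10]

index, shift = largest_mean_shift(bubble_counts)
print(f"largest shift starts at observation {index}: {shift:+.1f} bubbles on average")
```

Because a method like this compares counts only against each other, it can still flag a relative shift even when the absolute counts run higher than historical figures, as they did for the team's tool.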

Lessons Learned

Because of technical limitations, some automated vision inspection systems do not retain images permanently on the computer where the vision software is running. Acquiring and saving (ingesting) the ejected-image data set is critical to building AI-driven advanced analytics insights. We recommend that pharmaceutical companies invest in automated vision inspection machines that are capable of retaining eject images on an ongoing basis.

Other lessons learned include:

- It is important to invest in the IT infrastructure to build a scalable solution that can ingest, pre-process, train, test, and deploy large sets of images for near real-time insights, model inference, and image query.

- The current approach to maintaining and improving performance with automated vision inspection machines is effective; however, continuous improvement opportunities exist. There are opportunities to use AI/ML models as business tools to learn about ejection rates, root causes of defects, and defect trends. It may also be possible to facilitate rapid response/adjustments to the upstream process issues and to verify the effectiveness of corrective and preventive actions.

- Advanced analytics and AI (deep learning) applications in automated vision inspection machines have the potential to improve productivity in the factories of the future. It is imperative that automated vision inspection vendors seek to embed these capabilities in their future products.

- A speed-to-value, agile delivery model offers incremental value to businesses while keeping morale high during the adoption of transformational changes.

Next Steps

The automated vision inspection project team is continuing the process of developing models to identify all known defects for both lyophilized and liquid products. The team is also building an automated image-ingestion capability to go along with the automated vision inspection systems within our company’s manufacturing network. The team’s success so far in the project has been directly correlated with its ability to retain and label images to build the models. If a convolutional neural network model is going to account for the variation in each process and product, large numbers of images for every known defect in that manufacturing process/product are needed. Developing the data sets to build accurate models cannot be done without automated image saving and contextualization, as well as a method to tag each image with metadata that identifies associated site, product, inspection machine, and camera numbers for deeper-level insights.
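
As a rough illustration of what such tagging could look like, the Python sketch below attaches the four metadata fields named above to each saved image and filters a catalogue by any of them. The class name, field names, file paths, and example values are all hypothetical; a production system would more likely keep these records in a database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EjectImageRecord:
    """Metadata attached to one saved eject image (hypothetical schema)."""
    image_path: str
    site: str
    product: str
    machine: str
    camera: int
    label: str = "unlabelled"  # filled in after manual or model classification


def filter_records(records, **criteria):
    """Return the records whose fields match every keyword given."""
    return [
        record for record in records
        if all(getattr(record, field) == value for field, value in criteria.items())
    ]


# Placeholder catalogue entries.
catalogue = [
    EjectImageRecord("img/0001.png", "Site A", "Product X", "AVI-1", 3, "crack"),
    EjectImageRecord("img/0002.png", "Site A", "Product X", "AVI-1", 5),
    EjectImageRecord("img/0003.png", "Site B", "Product Y", "AVI-2", 3, "false_eject"),
]

camera_three = filter_records(catalogue, camera=3)
unlabelled = filter_records(catalogue, site="Site A", label="unlabelled")
print(len(camera_three), "images from camera 3")
print(len(unlabelled), "unlabelled images at Site A")
```

Keeping the label alongside the metadata means the same catalogue can serve both model training, which needs labeled images, and troubleshooting, which needs to slice ejects by machine or camera.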

Finally, the team would welcome collaboration with other pharmaceutical companies and automated vision inspection system manufacturers. Working with other large manufacturers provides opportunities to develop best practices and share the lessons learned from industrializing AI/ML (deep learning models) for visual inspection, helping the entire industry move forward.